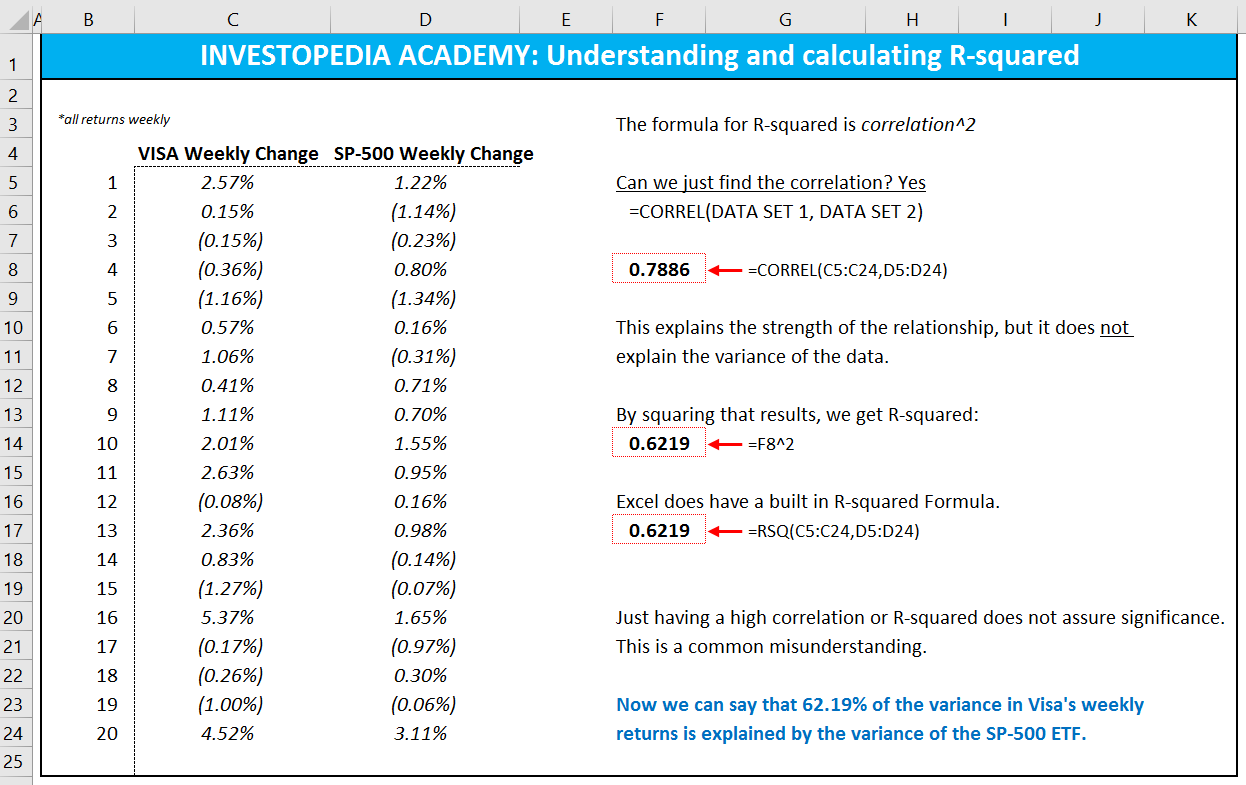

R-squared can be useful in investing and other contexts where you are trying to determine the extent to which one or more independent variables affect a dependent variable. However, it has limitations that make it less than perfectly predictive. R-squared gives you an estimate of how much of the movement in a dependent variable is associated with movements in an independent variable.

Adjusted R-squared

The fitted line plot displays the relationship between semiconductor electron mobility and the natural log of the density for real experimental data. Linear regression calculates an equation that minimizes the distance between the fitted line and all of the data points. Technically, ordinary least squares (OLS) regression minimizes the sum of the squared residuals. This study is a narrative review encompassing expert opinions, results from randomized controlled trials (RCTs), and observational studies relating to the use and interpretation of the coefficient of determination (R²) in clinical medicine. The adjusted R-squared is a modified version of R-squared that adjusts for the number of predictors in a regression model.
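To make "minimizes the sum of the squared residuals" concrete, here is a minimal sketch of a one-predictor OLS fit in plain Python. The data are made up for illustration and are not the semiconductor measurements; the function names `fit_line` and `sum_squared_residuals` are hypothetical helpers, not part of any library.

```python
def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line y = a + b*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Closed-form OLS solution for a single predictor.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sum_squared_residuals(x, y, intercept, slope):
    """Sum of squared vertical distances between the data and the line."""
    return sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))


# Made-up illustrative data (an assumption, not from the source).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

a, b = fit_line(x, y)
best = sum_squared_residuals(x, y, a, b)
# Nudging the slope away from the OLS value can only increase the sum.
worse = sum_squared_residuals(x, y, a, b + 0.1)
print(a, b, best, worse)
```

Any other choice of intercept and slope gives a sum of squared residuals at least as large as `best`, which is the sense in which OLS picks the "closest" line.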

Can R-Squared Be Negative?

For a least-squares model with an intercept, evaluated on the data it was fitted to, R-squared ranges from 0 to 1, where 1 indicates a perfect fit of the model to the data. Plotting fitted values by observed values graphically illustrates different R-squared values for regression models. In general you should look at adjusted R-squared rather than R-squared.
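The answer to the heading's question is yes once the predictions do not come from a least-squares fit with an intercept scored on its own data, for example when a model is scored on new data or is simply a poor model. The sketch below uses the usual definition, 1 minus the ratio of the residual sum of squares to the total sum of squares; the numbers and the helper name `r_squared` are assumptions made for illustration.

```python
def r_squared(observed, predicted):
    """1 - SS_res / SS_tot, computed against the mean of the observed data."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot


observed = [1.0, 2.0, 3.0, 4.0, 5.0]
good_predictions = [1.1, 1.9, 3.2, 3.8, 5.0]
bad_predictions = [5.0, 4.0, 3.0, 2.0, 1.0]  # trend points the wrong way

r2_good = r_squared(observed, good_predictions)
r2_bad = r_squared(observed, bad_predictions)
print(r2_good, r2_bad)
```

A negative value means the predictions are worse than always guessing the mean of the observed data.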

Properties and interpretation

While a high R-squared is required for precise predictions, it’s not sufficient by itself, as we shall see. In some fields, it is entirely expected that your R-squared values will be low. For example, any field that attempts to predict human behavior, such as psychology, typically has R-squared values lower than 50%. Humans are simply harder to predict than, say, physical processes.

However, there are important conditions for this guideline that I’ll talk about both in this post and my next post. When interpreting the R-Squared it is almost always a good idea to plot the data. That is, create a plot of the observed data and the predicted values of the data.

Take context into account

Because its formula penalizes each additional predictor, adjusted R-squared is a better metric than R-squared for comparing the fit of regression models with different numbers of predictor variables. There is one more consideration concerning the removal of variables from a model.
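As a rough illustration of how the adjustment works when comparing models, the sketch below applies the standard adjusted R-squared formula to two hypothetical models fitted to the same 30 observations. The R-squared values, sample size, and predictor counts are invented for the example.

```python
def adjusted_r_squared(r2, n_obs, n_predictors):
    """Adjusted R^2 = 1 - (1 - R^2) * (n - 1) / (n - p - 1)."""
    return 1 - (1 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


# Hypothetical models: three extra predictors raise R^2 only slightly.
small = adjusted_r_squared(0.800, n_obs=30, n_predictors=2)
large = adjusted_r_squared(0.805, n_obs=30, n_predictors=5)
print(round(small, 4), round(large, 4))
```

Here the larger model has the higher plain R-squared but the lower adjusted R-squared, so the extra predictors do not appear to earn their place.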

This includes taking the data points (observations) of dependent and independent variables and conducting regression analysis to find the line of best fit, often from a regression model. This regression line helps to visualize the relationship between the variables, and its coefficient estimates and predictions are crucial for understanding that relationship. From there, you would calculate predicted values, subtract them from the actual values, and square the results. This yields a list of errors squared, which is then summed and equals the unexplained variance (or “unexplained variation” in the formula above). If the variable to be predicted is a time series, it will often be the case that most of the predictive power is derived from its own history via lags, differences, and/or seasonal adjustment.
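The calculation steps described above can be written compactly as follows, where y_i are the actual values, ŷ_i the predicted values, and ȳ the mean of the actual values. The symbol names are a notational assumption; the structure is the standard definition of R-squared.

```latex
R^2 \;=\; 1 - \frac{\text{unexplained variation}}{\text{total variation}}
    \;=\; 1 - \frac{\sum_{i=1}^{n} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{n} (y_i - \bar{y})^2}
```

The numerator is the summed list of squared errors; the denominator is the same sum computed for a model that always predicts the mean.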

The linear regression version of RegressIt runs on both PCs and Macs and has a richer and easier-to-use interface and much better designed output than other add-ins for statistical analysis. It may make a good complement, if not a substitute, for whatever regression software you are currently using, Excel-based or otherwise. RegressIt is an excellent tool for interactive presentations, online teaching of regression, and development of videos of examples of regression modeling. It includes extensive built-in documentation and pop-up teaching notes as well as some novel features to support systematic grading and auditing of student work on a large scale. There is a separate logistic regression version with highly interactive tables and charts that runs on PCs.

Essentially, his job was to design the appropriate research conditions, accurately generate a vast sea of measurements, and then pull out patterns and meanings from it. In investing, R-squared is used to gauge the practical usefulness and trustworthiness of a security's beta. But consider a model that predicts tomorrow’s exchange rate and has an R-squared of 0.01.

- These residuals look quite random to the naked eye, but they actually exhibit negative autocorrelation, i.e., a tendency to alternate between overprediction and underprediction from one month to the next.

- If the beta is also high, it may produce higher returns than the benchmark, particularly in bull markets.

- As a consequence, we estimate the error variance and the variance of the dependent variable with their adjusted sample variances, which are unbiased estimators.

- However, this may still account for less than 50% of the variability of income.

- Some of these concern the “practical” upper bounds for R² (your noise ceiling), and its literal interpretation as a relative, rather than absolute measure of fit compared to the mean model.
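The negative autocorrelation mentioned in the first point of the list above is hard to see by eye but easy to check numerically. A minimal sketch, assuming a short made-up series of monthly residuals and a hypothetical helper `lag1_autocorrelation`:

```python
def lag1_autocorrelation(residuals):
    """Correlation between each residual and the one immediately before it."""
    n = len(residuals)
    mean = sum(residuals) / n
    centered = [r - mean for r in residuals]
    numerator = sum(centered[t] * centered[t - 1] for t in range(1, n))
    denominator = sum(c * c for c in centered)
    return numerator / denominator


# Invented residuals that alternate between over- and underprediction.
alternating = [0.5, -0.4, 0.6, -0.5, 0.4, -0.6]
rho = lag1_autocorrelation(alternating)
print(rho)
```

A value well below zero indicates the alternating pattern; a value near zero is what you would expect from residuals that are genuinely random from one period to the next.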

For more information about how a high R-squared is not always a good thing, read my post Five Reasons Why Your R-squared Can Be Too High. The R-squared in your output is a biased estimate of the population R-squared.